William T. Moye

ARL Historian

January 1996

Fifty years ago, the U.S. Army unveiled the Electronic Numerical Integrator and Computer (ENIAC), the world's first operational, general-purpose, electronic digital computer, developed at the Moore School of Electrical Engineering, University of Pennsylvania. Of the scientific developments spurred by World War II, ENIAC ranks as one of the most influential and pervasive.

The origins of the Ballistic Research Laboratory (BRL) lie in World War I, when pioneering work was done in the Office of the Chief of Ordnance, and especially the Ballistics Branch created within the Office in 1918. In 1938, the activity, known as the Research Division at Aberdeen Proving Ground (APG), Maryland, was renamed the Ballistic Research Laboratory. In 1985, BRL became part of LABCOM. In the transition to the Army Research Laboratory (ARL), BRL formed the core of the Weapons Technology Directorate, with computer technology elements migrating to the Advanced Computational and Information Sciences Directorate (now Advanced Simulation and High-Performance Computing Directorate, ASHPC), and vulnerability analysis moving into the Survivability/Lethality Analysis Directorate (SLAD).

The need to speed the calculation and improve the accuracy of the firing and bombing tables constantly pushed the ballisticians at Aberdeen. As early as 1932, personnel in the Ballistic Section had investigated the possible use of a Bush differential analyzer. Finally, arrangements were made for construction, and a machine was installed in 1935 as a Depression-era "relief" project. Shortly thereafter, lab leadership became interested in the possibility of using electrical calculating machines, and members of the staff visited International Business Machines in 1938. Shortage of funds and other difficulties delayed acquisition until 1941, when a tabulator and a multiplier were delivered.

With the outbreak of the war, work began to pile up, and in June 1942, the Ordnance Department contracted with Moore School to operate its somewhat faster Bush differential analyzer exclusively for the Army. Captain Paul N. Gillon, then in charge of ballistic computations at BRL, requested that Lieutenant Herman H. Goldstine be assigned to duty at the Moore School as supervisor of the computational and training activities. This put Goldstine, a Ph.D. mathematician, and the BRL annex of firing table personnel in the middle of a very talented group of scientists and engineers, among them Dr. John W. Mauchly, a physicist, and J. Presper Eckert, Jr., an engineer.

Despite operating the computing branch with analyzer at APG and the sister branch and analyzer at Moore School, BRL could not keep up with new demands for tables, coming in at the rate of about six a day. Goldstine and the others searched for ways to improve the process. Mauchly had come to Penn shortly after his 1941 visit with John Vincent Atanasoff at Iowa State College to discuss the latter's work on an electronic computer. In the fall of 1942, Mauchly wrote a memorandum, sketching his concept of an electronic computer, developed in consultation with Eckert. Ensuing discussions impressed Goldstine that higher speeds could be achieved than with mechanical devices.

About this time, Captain Gillon had been assigned to the Office of the Chief of Ordnance as deputy chief of the Service Branch of the Technical Division, with responsibility for the research activities of the Department. Early in 1943, Goldstine and Professor John Grist Brainerd, Moore School's director of war research, took to Gillon an outline of the technical concepts underlying the design of an electronic computer. Mauchly, Eckert, Brainerd, Dr. Harold Pender (Dean of Moore School), and other members of the staff worked rapidly to develop a proposal presented to Colonel Leslie E. Simon, BRL Director, in April and immediately submitted to the Chief of Ordnance. A contract was signed in June.

The so-called "Project PX" was placed under the supervision of Brainerd, with Eckert as chief engineer and Mauchly as principal consultant. Goldstine was the resident supervisor for the Ordnance Department and contributed greatly to the mathematical side, as well. Three other principal designers worked closely on the project: Arthur W. Burks, Thomas Kite Sharpless, and Robert F. Shaw. Gillon provided crucial support at Department level.

The original agreement committed $61,700 in Ordnance funds. Supplements extended the work, increased the amount to a total of $486,804.22, and assigned technical supervision to BRL. Construction began in June 1944, with final assembly in the fall of 1945, and the formal dedication in February 1946.

The only mechanical elements in the final system were actually external to the calculator itself. These were an IBM card reader for input, a card punch for output, and the 1,500 associated relays. By today's standards, ENIAC was a monster with its 18,000 vacuum tubes, but ENIAC was the prototype from which most other modern computers evolved. Its impact on the generation of firing tables was obvious. A skilled person with a desk calculator could compute a 60-second trajectory in about 20 hours; the Bush differential analyzer produced the same result in 15 minutes; but the ENIAC required only 30 seconds, less than the flight time.

During World War II, a "computer" was a person who calculated artillery firing tables using a desk calculator. Six women "computers" were assigned to serve as ENIAC's original programming group. Although most were college graduates, the "girls" were told that only "men" could get professional ratings. Finally, in November 1946, many of the women received professional ratings.

ENIAC's first application was to solve an important problem for the Manhattan Project. Involved were Nicholas Metropolis and Stanley Frankel from the Los Alamos National Laboratory, who worked with Eckert, Mauchly, and the women programmers. Captain (Dr.) Goldstine and his wife, Adele, taught Metropolis and Frankel how to program the machine, and the "girls" would come in and set the switches according to the prepared program. In fact, the scheduled movement of ENIAC to APG was delayed so that the "test" could be completed before the machine was moved.

Late in 1946, ENIAC was dismantled, arriving in Aberdeen in January 1947. It was operational again in August 1947 and represented "the largest collection of interconnected electronic circuitry then in existence."

ENIAC as built was never copied, and its influence on the logic and circuitry of succeeding machines was not great. However, its development and the interactions among people associated with it critically impacted future generations of computers. Indeed, two activities generated by the BRL/Moore School programs, a paper and a series of lectures, profoundly influenced the direction of computer development for the next several years.

During the design and construction phases of the ENIAC, it had been necessary to freeze its engineering designs early on in order to develop the operational computer so urgently needed. At the same time, as construction proceeded and the staff could operate prototypes, it became obvious that it was both possible and desirable to design a computer that would be smaller and yet would have greater flexibility and better mathematical performance.

By late 1943 or early 1944, members of the team had begun to develop concepts to solve one of ENIAC's major shortcomings -- the lack of an internally stored program capability. That is, as originally designed, the program was set up manually by setting switches and cable connections. But in July 1944, the team agreed that, as work on ENIAC permitted, they would pursue development of a stored-program computer.

At this point, in August 1944, one of the most important and innovative (and influential) scientists of the 20th century joined the story. Dr. John L. von Neumann of the Institute for Advanced Study (IAS) at Princeton was a member of BRL's Scientific Advisory Board. During the first week of August, Goldstine met von Neumann on the platform at the Aberdeen train station and told him about the ENIAC project. A few days later, Goldstine took von Neumann to see the machine. From this time on, von Neumann became a frequent visitor to the Moore School, eagerly joining discussions about the new and improved machine that would store its "instructions" in an internal memory system. In fact, von Neumann participated in the board meeting at Aberdeen on August 29 that recommended funding the Electronic Discrete Variable Computer (EDVAC).

In October 1944, the Ordnance Department approved $105,600 in funds for developing the new machine. In June 1945, von Neumann produced "First Draft of a Report on the EDVAC," a seminal document in computer history and a controversial one. It was intended as a first draft for circulation among the team; however, it was widely circulated, and other members of the team were annoyed to find little or no mention of their own contributions. This, combined with patent rights disputes, led to several confrontations and the later breakup of the team.

The second of the great influences was a series of 48 lectures given at the Moore School in July and August 1946, entitled "Theory and Techniques for the Design of Electronic Digital Computers." Eckert and Mauchly were both principal lecturers, even though they had left Moore School to form their own company. Other principals included Burks, Sharpless, and Chuan Chu. Officially, 28 people from both sides of the Atlantic attended, but many more attended at least one lecture.

Although most "students" expected the sessions to focus on ENIAC, many lecturers discussed designs and concepts for the new, improved machine, EDVAC. Together, von Neumann's paper and the Moore School lectures circulated enough information about EDVAC that its design became the basis for several machines. The most important of these were two British machines and one U.S. machine: the EDSAC (Electronic Delay Storage Automatic Computer), built by Maurice V. Wilkes at the Mathematical Laboratory at Cambridge University and completed in 1949; the Mark I, developed by F. C. Williams (later joined by Alan M. Turing) at the University of Manchester and completed in 1951 in cooperation with Ferranti, Ltd.; and the Standards Eastern Automatic Computer (SEAC), developed at the National Bureau of Standards and completed in 1950.

Meanwhile, despite the breakup of the team, BRL still had a contract with the Moore School for construction of EDVAC. It was decided that Moore School would design and build a preliminary model, while IAS would undertake a program to develop a large-scale comprehensive computer. Basic construction of EDVAC was performed at Moore School, and beginning in August 1949, it was moved to its permanent home at APG.

Although EDVAC was reported as basically complete, it did not run its first application program until two years later, in October 1951. As one observer put it, "Of course, the EDVAC was always threatening to work." As constructed, EDVAC differed from the early von Neumann designs and suffered frequent redesigns and modifications. In fact, at BRL, even after it achieved reasonably routine operational status, it was largely overshadowed by the lab's new machine, the Ordnance Variable Automatic Computer (ORDVAC), installed in 1952. Interestingly, ORDVAC's basic logic was developed by von Neumann's group at IAS.

Meanwhile, in 1948 after reassembly at APG, ENIAC was converted into an internally stored-fixed program computer through the use of a converter code. In ensuing years, other improvements were made. An independent motor-electricity generator set was installed to provide steady, reliable power, along with a high-speed electronic shifter, and a 100-word static magnetic-core memory developed by Burroughs Corp.

From 1948 until its retirement in 1955, ENIAC operated successfully for a total of 80,223 hours. In addition to ballistics, fields of application included weather prediction, atomic energy calculations, cosmic ray studies, thermal ignition, random-number studies, wind tunnel design, and other scientific uses.

Significantly, the Army also made ENIAC available to universities free of charge, and a number of problems were run under this arrangement, including studies of compressible laminar boundary layer flow (Cambridge, 1946), zero-pressure properties of diatomic gases (Penn, 1946), and reflection and refraction of plane shock waves (IAS, 1947).

The formal dedication and dinner were held on February 15, 1946 in Houston Hall on the Penn Campus. The Penn president presided, and the president of the National Academy of Sciences was the featured speaker. Major General Gladeon M. Barnes, Chief of Research and Development in the Office of the Chief of Ordnance, pressed the button that turned on ENIAC. To commemorate this event, on February 14, 1996, Penn, the Association for Computing Machinery (ACM), the City of Philadelphia, and others are sponsoring a "reactivation" ceremony and celebratory dinner. As part of the ACM convention, ARL will sponsor a session on Sunday, 18 February, to present the story of Army/BRL achievement. One of the speakers will be Dr. Herman H. Goldstine.

Monday, 20 October 2008

ENIAC: The Army-Sponsored Revolution

History of C++ Programming Language

In 1963 the CPL (Combined Programming Language) appeared, with the idea of being more specific to the concrete programming tasks of that time than ALGOL or FORTRAN. Nevertheless, this same specificity made it a big language and, therefore, difficult to learn and implement.

In 1967, Martin Richards developed BCPL (Basic Combined Programming Language), a simplification of CPL that kept the most important features the language offered. It, too, was an abstract and somewhat large language.

In 1970, Ken Thompson, immersed in the development of UNIX at Bell Labs, created the B language. It was a port of BCPL for a specific machine and system (DEC PDP-7 and UNIX), adapted to his particular tastes and needs. The final result was an even greater simplification of CPL, although dependent on the system. It had significant limitations: for example, it compiled not to executable code but to threaded code, which runs more slowly, and it was therefore inadequate for the development of an operating system. So, from 1971, Dennis Ritchie, from the Bell Labs team, began the development of a B compiler which, among other things, was able to generate executable code directly. This "New B", finally called C, also introduced some new concepts to the language, such as data types (char).

By 1973, Dennis Ritchie had developed the basis of C. The inclusion of types and their handling, the improvement of arrays and pointers, and the later demonstrated capacity for portability without becoming a high-level language all contributed to the expansion of the C language. It was established with the book "The C Programming Language" by Brian Kernighan and Dennis Ritchie, known as the White Book, which served as the de facto standard until the publication of the formal ANSI standard (ANSI X3J11 committee) in 1989.

In 1980, Bjarne Stroustrup, from Bell Labs, began the development of the C++ language, which would formally receive this name at the end of 1983, when its first manual was about to be published. In October 1985, the first commercial release of the language appeared, as well as the first edition of the book "The C++ Programming Language" by Bjarne Stroustrup.

During the 80s, the C++ language was refined until it became a language with its own personality, all with very little loss of compatibility with C code and without giving up C's most important characteristics. In fact, the ANSI standard for the C language published in 1989 took on a good part of the contributions of C++ to structured programming.

From 1990 on, ANSI committee X3J16 began the development of a specific standard for C++. In the period that elapsed until the publication of the standard in 1998, C++ saw a great expansion in its use, and today it is the preferred language for developing professional applications on all platforms.

C++ has continued to evolve, and a new version of the standard, C++09, is being developed to be published before the end of 2009, with several new features.

What good is a computer without Software?

Paul Allen and Bill Gates had written and sent the letter using letterhead they had created for their high school computer company - Traf-o-Data. Bill was attending Harvard, and Paul was working in the Boston area for Honeywell. They had sent the letter planning to do a phone followup. They soon called Ed Roberts in Albuquerque to see if he'd be interested in their Basic (which didn't actually exist yet), and he said that he would be as soon as he could get some memory cards for the Altair so it would have enough memory to try to run Basic; maybe in a month or so.

Herein begins some of the most misunderstood facts of the microcomputer revolution, so pay close attention. Also remember that way back in the 2nd show of this series I told you that DEC minicomputers played an important role, and now we'll learn how.

Gates and Allen figured they had a 30 day window (if you'll pardon the pun) to get a version of Basic ready to run on the Altair microcomputer. But they didn't have a microcomputer to develop this with, because the only microcomputer in the world at that time was sitting in Albuquerque, New Mexico. Seems like a Catch 22 situation - but wait.

They hadn't had an 8008 processor either, which they used in their high school computer company Traf-o-Data - which measured vehicle traffic flow. So how did they program an 8008 earlier without having one?

Well, when Paul Allen was a student at WSU he had actually tried to create a simulator on the IBM mainframe there, but he wasn't familiar enough with mainframes to make it work. When they later got a summer job at a company that used DEC minicomputers, Paul was able to create a simulator of the Intel 8008 on the DEC computer. Being intimately familiar with DECs from the ground up, and having the Intel manual for the 8008, Paul had written a program on the DEC which would simulate the exact operation of the Intel chip. Then Bill Gates was able to use this simulator to write the program which ran their Traf-o-Data computer.

Having developed this software tool previously, they used it again to create a simulator on another DEC computer at Harvard, this time for the Intel 8080. The Basic language they didn't actually write from scratch. Basic had been released into the public domain, so they used bits and pieces from various dialects of different versions of Basic to come up with their own to run on the Altair. This was a frantic few weeks, while they both worked and attended school, and spent their evenings in the school's computer labs. Then, still having never touched an Altair computer, Paul Allen flew to meet Ed Roberts at MITS in Albuquerque with a paper tape of their just completed version of Basic to try out on the Altair 8800. And miraculously it worked the first time.

Finally there was usable software to make this computer really useful, and to change the world. Paul Allen quit his job and went to work at MITS. Bill Gates soon dropped out of Harvard and moved to Albuquerque too. They authorized MITS to sell their Basic as part of the Altair kit. They also retained the rights to market it themselves. A lot of controversy arose over whether it was really theirs to sell in the first place, as the boys had used government funded computers to develop their Basic on, and as Basic was in the public domain. Many of the early hackers fiercely resented this, and early copies of Altair Basic were pirated and passed from user to user.

Gates and Allen eventually formed their own company, Micro Soft - originally spelled as two words - there in Albuquerque. Within months, they were modifying their Basic to run on other early microcomputers. They got into a lawsuit with Ed Roberts over the rights to Basic, and eventually won. Within a year, Ed Roberts sold out and retired from the industry he had started himself, and is now a country doctor in Georgia. Microsoft began doing business with other emerging companies, and next week's show is titled "Send in the clones."

Networking With Ethernet

Ethernet was invented at the Xerox Palo Alto Research Center in the 1970s by Dr. Robert M. Metcalfe. It was designed to support research on the "office of the future", providing a way for personal workstations to share data. One of the earlier examples of ethernet usage in theatrical lighting occurred with the introduction of the ethernet-compatible Obsession control console, unveiled at LDI92 in Dallas. The Obsession utilized ethernet to provide remote access to video displays and DMX512 outputs over a single cable. In the seven years since, nearly every lighting manufacturer has found a use for ethernet in their products, including in entry-level control consoles traditionally marketed to smaller facilities like churches and high schools. Today, ethernet technology can be found in Strand's ShowNet(TM), Electronics Diversified's Integrated Control Environment (ICE), and Colortran's ColorNet, among other applications.

The term ethernet refers specifically to a set of standards for connecting computers so that they can share information over a common wire or network. The IEEE 802.3 standard defines the electrical specifications for the types of wire and connectors that link computers together. There are other standards to define formats by which data is transmitted over the wire (TCP/IP, for example) that are also grouped under the "ethernet" umbrella.

Ethernet has several advantages over other existing communication standards. It is the established standard for networking business computers, so many qualified sources for installation, equipment and maintenance already exist. Ethernet is also a high-bandwidth communication medium. This means that a single network can carry all the data commonly required by an entertainment control system at extremely high rates of speed. Finally, an ethernet-based control system is bi-directional, which means that devices on the network can both receive instructions and report the status of the devices on the network to a central location.

Over the past several years, the cost of ethernet has dropped considerably, benefiting from the economies of scale provided by its use in the computer industry. This cost reduction can be seen in everything from cables to hubs to the ethernet card in a lighting designer's laptop computer. The availability of common ethernet hardware is another inherent advantage. Have you ever tried to find a five-pin XLR connector or DMX512 opto-splitter in Las Cruces, NM, on a Saturday? You can't. But you can buy ethernet cables and hubs at almost any computer store.

With the relative low cost and ready availability of ethernet components, entertainment lighting manufacturers and other industries have embraced ethernet with great gusto. Today ethernet is used in everything from high-end computing to amusement-park turnstiles.

A simple network configuration would consist of a number of devices (nodes) linked together by a common wire. An ethernet node is a device that can receive instructions over the network and then, based on those instructions, perform the function it is designed for. A DMX node, for example, is basically a translation box that can receive commands from a control console on an ethernet wire, translate that information into DMX512 levels, then transmit DMX512 information over another wire to a dimmer rack.
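The translation step a DMX node performs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the incoming command format (a dictionary of channel numbers and percentage levels) and the function name `to_dmx_frame` are hypothetical, since each manufacturer's ethernet packet contents are proprietary. The only DMX512 facts relied on are the 512 channels, the 8-bit (0 to 255) level per channel, and the leading start code of zero for dimmer data.

```python
DMX_CHANNELS = 512  # one DMX512 universe carries up to 512 channel levels


def to_dmx_frame(levels_percent):
    """Translate {channel: percent} commands into a DMX512-style frame.

    The input format is a made-up stand-in for whatever a console sends
    over ethernet. The result is 513 bytes: slot 0 is the start code
    (0x00 for dimmer levels), slots 1..512 are 8-bit channel levels.
    """
    frame = bytearray(DMX_CHANNELS + 1)  # all channels default to level 0
    for channel, percent in levels_percent.items():
        if not 1 <= channel <= DMX_CHANNELS:
            raise ValueError(f"channel {channel} is outside 1..{DMX_CHANNELS}")
        clamped = max(0.0, min(100.0, percent))      # keep within 0..100 %
        frame[channel] = round(clamped * 255 / 100)  # scale to 0..255
    return bytes(frame)


if __name__ == "__main__":
    frame = to_dmx_frame({1: 100, 2: 0, 512: 100})
    print(len(frame), frame[1], frame[2], frame[512])
```

A real node would then clock this frame out on its DMX512 port repeatedly; that serial-line side is outside the scope of the sketch.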

Nodes can be linked together using different cabling schemes that are determined by the type of cable used. For example, a thinnet (10base2) network connects nodes using a bus topology. This means that devices are connected in series by a single continuous cable. Each node is connected by a T connector tapped directly into the thinnet cable, and no "star" configuration is allowed. Unfortunately, thinnet cannot accommodate the higher bandwidth requirements currently called for in many markets, so its usefulness in the future is limited. The bus topology commonly used in thinnet networks is also something to consider, since a cable failure (remember, there's only one cable) will disrupt activity on the entire network.

A more fault-resistant network can be accomplished by using unshielded twisted-pair (10baseT, also referred to as UTP) cabling, connectors, and hubs. UTP cable is an eight-conductor cable that allows for a star configuration by using a network hub to connect the nodes. The network hub (sometimes called a concentrator) serves many functions. One function is to provide a central location with discrete inputs for all cables in the network to connect to. This makes UTP networks very robust, since a single cable failure will not compromise the entire network. Another function is to swap the send and receive pairs of the UTP cables to allow bi-directional communication. This pair swapping is a requirement of UTP cable conventions.
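The difference in fault tolerance between the two cabling schemes can be illustrated with a deliberately simplified Python model. It assumes, as described above, that any break in the shared thinnet cable takes the whole bus down, while a break in one UTP run only isolates the node on that run. The node names and function names are hypothetical.

```python
def bus_nodes_online(nodes, broken_segments):
    """Thinnet bus: every node shares one continuous cable.

    Simplifying assumption: a break anywhere disrupts the whole bus,
    so either all nodes are online or none are.
    """
    return set() if broken_segments else set(nodes)


def star_nodes_online(nodes, broken_cables):
    """UTP star: each node has its own cable to the hub.

    Only the nodes whose own cable has failed drop off the network.
    """
    return {node for node in nodes if node not in broken_cables}


if __name__ == "__main__":
    nodes = ["console", "video_node", "dmx_node", "dimmer_room"]

    # One failed segment between two neighbours on the bus.
    print(bus_nodes_online(nodes, {("video_node", "dmx_node")}))

    # One failed cable between the hub and a single node.
    print(star_nodes_online(nodes, {"dmx_node"}))
```

The model ignores hub failure, which is the star topology's own single point of failure.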

An ethernet system using UTP cable has a maximum length of 100m (328') between network devices. However, since another function of a network hub can be to act as a repeater, it's possible to double the effective network length to 200m (656'). In larger installations where very long runs are required, UTP wiring can be used in conjunction with a fiber-optic backbone to create a network that's both easy to troubleshoot and capable of covering up to 2,000m (6,560'). And for even larger networks it's possible to use a combination of multiple hubs, fiber, and UTP.

Most UTP networks currently transfer 10Mbps (megabits per second) of data; that's 40 times the bandwidth of DMX512. More importantly, UTP cabling supports 100Mbps (fast ethernet), which is currently supplanting the old 10Mbps standard. Gigabit ethernet, which is not yet in common use, will send 100 megabytes of data per second. That's fast enough to back up a standard computer hard drive in under a minute. Currently, all but the most elaborate entertainment systems easily get by using 10baseT.
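The arithmetic behind these comparisons is easy to check. The short Python sketch below assumes the DMX512 line rate of 250 kilobits per second and converts raw ethernet bit rates into megabytes per second. Note that the raw figure for gigabit ethernet works out to 125 megabytes per second; a figure of about 100 megabytes per second is plausible once protocol overhead is taken into account, though the exact overhead is not modeled here.

```python
DMX512_BPS = 250_000  # DMX512 line rate: 250 kilobits per second

ETHERNET_RATES_BPS = {
    "10baseT": 10_000_000,
    "fast ethernet": 100_000_000,
    "gigabit ethernet": 1_000_000_000,
}


def ratio_to_dmx(rate_bps):
    """How many times the DMX512 line rate a given bit rate represents."""
    return rate_bps / DMX512_BPS


def megabytes_per_second(rate_bps):
    """Raw throughput in megabytes per second (8 bits per byte, no overhead)."""
    return rate_bps / 8 / 1_000_000


if __name__ == "__main__":
    for name, rate in ETHERNET_RATES_BPS.items():
        print(name, ratio_to_dmx(rate), megabytes_per_second(rate))
```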

Devices on a network talk to each other by sending information in "packets". A packet can be sent and/or received from any device (node) on the network. Simply speaking, a packet might contain a single data type, like DMX512 or console video, with an address tag on the front end that identifies the type of information the packet contains. Ethernet nodes monitor the data packets as they go by on the network, but only accept those specifically addressed to them. That's how ethernet networks serve different types of devices with the same cable. And since each packet goes to every device on the network (in a simple network configuration), a single message can carry information to more than one device. In more advanced networks, a network switch can be used to divide the network into smaller subnets. A switch increases the volume of information the network can handle because it helps manage the flow of data across the various subnets.
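The way nodes pick their own traffic off a shared wire can be sketched as follows. This is a toy Python model, not any manufacturer's protocol: the `Packet` and `Node` classes, the tag strings, and the `broadcast` helper are all hypothetical names chosen for illustration. Every packet is shown to every node, and each node keeps only the packets whose tag it is configured to accept.

```python
from dataclasses import dataclass, field


@dataclass
class Packet:
    tag: str        # hypothetical tag naming the data type, e.g. "dmx512"
    payload: bytes  # the data itself (contents are vendor-specific)


@dataclass
class Node:
    name: str
    accepts: set                                  # tags this node listens for
    received: list = field(default_factory=list)  # packets the node kept

    def on_wire(self, packet):
        """Inspect a passing packet; keep it only if its tag is accepted."""
        if packet.tag in self.accepts:
            self.received.append(packet)


def broadcast(nodes, packet):
    """Simple shared network: every node sees every packet."""
    for node in nodes:
        node.on_wire(packet)


if __name__ == "__main__":
    nodes = [
        Node("stage_left_dmx", {"dmx512"}),
        Node("dimmer_room_dmx", {"dmx512"}),
        Node("tech_table_video", {"video"}),
    ]
    broadcast(nodes, Packet("dmx512", bytes([255, 128, 0])))
    for node in nodes:
        print(node.name, len(node.received))
```

One DMX512 packet reaches both DMX nodes in a single transmission, while the video node ignores it, which is the behavior the paragraph above describes for a simple network.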

Before ethernet, most lighting system access was "hard-wired" through the control console. This forced designers and technicians to wait in line to complete their work, and posed difficult challenges when it came time to reconfigure a system for a special application. If the designer was entering cues, the control system was tied up until cueing was finished. This meant that the electricians had to wait for the designer to finish before they could begin work on their notes, adding to production time. A networked system facilitates multi-tasking on a level previously unheard of. In an industry besieged by deadlines, the ability to have many people using different parts of the control system simultaneously is fast becoming a requirement of any large-scale production.

The adoption of the DMX512 control standard answered the call for better interconnectivity, but recently the limitations of DMX512-based systems have become apparent in installations that require large amounts of distributed data. Running distributed data throughout a facility has traditionally meant pulling many different types of cable for each desired function - this is both expensive, and for most practical purposes, difficult to change once the installation is complete. Ethernet allows for a more flexible installation since it's both easy to add devices to a network and possible to reconfigure them for different functions once they have been installed.

With ethernet, gone are the days of running a half dozen drop cables for three ports of DMX out, DMX in, remote focus and dimmer feedback. In a networked environment a single cable can handle all of this data, including dimmer levels to the racks. It quickly becomes clear that the ability to remotely configure such devices to do multiple tasks would also be beneficial. On a networked system all of the devices are already physically connected via ethernet, so it's a small leap to be able to change the function of a remote port on a node. Want that DMX512 output to take DMX512 in? No problem: just log onto the network and change it.

Unlike control consoles, few dimmer racks, moving lights, or other devices currently provide direct ethernet support. However, these devices can easily be addressed on a network by using various types of interface equipment (ethernet nodes). Most lighting manufacturers offer a number of different interfaces to bridge the technology gap, each with a different selection of data outputs to talk to the dimmer racks. These interfaces can be permanently installed or portable. The most flexible systems might include a combination of installed and portable nodes, which can be connected to the ethernet system as needed.

While lighting manufacturers have agreed to make their equipment compatible with the DMX512 communications standard, they have not yet agreed on a common ethernet protocol. Currently, while there are standards for the physical components of a network and for the addressing and formatting of different types of ethernet packets, there are no standards for the contents of the packet itself. Manufacturers in the entertainment lighting industry retain their own proprietary data standards, and as a result their gear is not compatible on the same network. The Entertainment Services and Technology Association (ESTA) is working with the lighting industry to create ethernet standards that will allow interoperability.

At present, many ethernet nodes are multifunctional, providing connections for several types of control or output. They may allow functionality to a secondary control center outside the main control booth or a simple video display at the tech table. Often, nodes are installed onstage, in the dimmer room, or in a technical office. Ethernet nodes can interface with remote devices such as remote focus units, DMX512-capable moving lights, and DMX512 dimmer racks. The distribution of these signals may go around the stage, up to the catwalk, or to other remote points as they are needed. As use of ethernet matures and becomes more affordable, lighting manufacturers are designing simpler nodes with more specific functions.

One of the more common ethernet nodes is a video interface that provides remote access to the main console's video displays. The most common application is to provide console video and cue data at a designer's position, focus table, or stage manager panel. And since an ethernet node can be placed anywhere on the ethernet network, other applications are limited only by the system designer's imagination.

A focus call can use a video node and a remote focus unit right on the stage, without tying up the main console. A stage manager can monitor a cuesheet display from the main console during a performance.

Ethernet lets you create an enhanced tech table by allowing a facility to use a remote console that connects to the main console or even a personal computer over the network. In addition to providing console video and I/O, remote consoles include all or most of the buttons, faders, wheels, encoders, etc. found on the main console. This allows for simultaneous programming. For example, if you have a mixture of conventional and automated fixtures, one programmer can use the main console for the conventional fixtures and a second programmer can cue the automated fixtures with the remote console. Ethernet transfers the keystrokes entered at the remote console to the main console so that playback from the main console incorporates those commands into a single event. This means only one console is needed for the performance.

Installing new ethernet nodes on an existing network is a relatively straightforward process, well within the capabilities of the average electrical contractor or stage electrician. The fact that ethernet allows for such flexibility encourages system designers and consultants to think about integrating multiple control systems on a common network.

The ability to integrate theatrical control systems with architectural systems is another attractive feature to both customers and manufacturers. An entertainment system integrated with a common ethernet protocol (TCP/IP, for example) means that it's possible to have many different types of backup systems on a network and even the ability to log in from a remote location and check the status of the system.

A multiple-use facility, such as a large convention center, puts ethernet to prime use, linking entertainment and architectural systems. Consider a facility where ethernet links five Obsession II lighting control consoles with a half-dozen different Unison CMEi architectural control processors. The CMEi processors can all output levels to any of the 17 Sensor SR48AF dimmer racks installed onsite. Scattered around the facility are 20 different DMX512 and video nodes. All of these devices are linked to the network via a network switch and numerous fiber-optic network concentrators.

The specification for this convention center requires the ability to patch and control any dimmer in the system from any of the multiple control consoles or the architectural system. To address the sheer scale of this convention center, it would be prudent to create a network divided into subnets. This means utilizing available network hardware (hubs and switches) and encapsulating the data into a format that the hardware can recognize. TCP/IP (the same format people use to access the Internet) can be used to route information to various parts of the facility.
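As a rough illustration of dividing a facility network into subnets, the sketch below uses Python's standard `ipaddress` module. The address block, the prefix length, and the area names are all assumptions made up for the example; they are not taken from any real installation.

```python
import ipaddress

# Hypothetical private address block for the whole facility (an assumption).
facility = ipaddress.ip_network("10.10.0.0/16")

# Hypothetical areas of the building, each given its own /24 subnet.
areas = ["ballroom", "exhibit_hall", "lobby", "dimmer_rooms"]
subnets = dict(zip(areas, facility.subnets(new_prefix=24)))

def area_for(address):
    """Return the area whose subnet contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for area, net in subnets.items():
        if ip in net:
            return area
    return None

for area, net in subnets.items():
    print(f"{area:<13} {net}")
```

A router or layer-3 switch would then forward traffic between these subnets, so that a console in one area can still reach dimmer racks in another.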

In this application, it's possible to have all of the theatrical consoles competing for the same dimmers as the architectural system. As you can imagine, this presents some unique problems not found in conventional DMX512-based systems. In conventional control systems, if there are two inputs to a single dimmer in a rack, the competing levels will simply pile on to one another. Now with the possibility for up to six different inputs, well, what's a dimmer to do?

By developing an ethernet protocol that allows for multiple control systems to arbitrate who has control of what dimmer, it's possible to make large integrated control systems easier to manage for the end user. When the user boots up an Obsession II console anywhere in the facility, the console will ask what dimmers the user would like to control. The user has the option to take control of any dimmer in the system, and in doing so, gain control of lobby lights or even the dimmers that might have been previously controlled by the Obsession II down the hall. This is especially useful when a moving partition is removed and you need to combine two separate control systems into one.

The Obsession II processor then sends a message to the rest of the control systems to let them know that it now has control of a specific group of dimmers. This information is also shared with the Unison architectural system, which, in the event of a console failure, can then take control of the failed console's dimmers and set them to a predetermined level.
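The arbitration idea can be sketched in a few lines of Python. This is only a toy model of the behavior described above, not the actual protocol used by any manufacturer; the class name, method names, controller names, and the fallback level are all hypothetical.

```python
class DimmerArbiter:
    """Toy model: each dimmer has exactly one owning controller."""

    def __init__(self, fallback_level=50):
        self.owner = {}   # dimmer number -> controller name
        self.level = {}   # dimmer number -> current level (0-100)
        self.fallback_level = fallback_level

    def claim(self, controller, dimmers):
        """Give `controller` ownership, displacing any previous owner."""
        for d in dimmers:
            self.owner[d] = controller

    def set_level(self, controller, dimmer, level):
        """Apply a level only if it comes from the dimmer's current owner."""
        if self.owner.get(dimmer) != controller:
            return False
        self.level[dimmer] = level
        return True

    def controller_failed(self, controller, backup):
        """Hand a failed controller's dimmers to `backup` at the preset level."""
        for d, who in self.owner.items():
            if who == controller:
                self.owner[d] = backup
                self.level[d] = self.fallback_level


arb = DimmerArbiter(fallback_level=30)
arb.claim("console_A", [1, 2, 3])
arb.claim("console_B", [3])             # console B takes over dimmer 3
arb.set_level("console_A", 3, 80)       # ignored: A no longer owns dimmer 3
arb.set_level("console_B", 3, 80)       # accepted
arb.controller_failed("console_B", "architectural")
```

Because every dimmer has a single owner at any moment, levels from other controllers are rejected rather than merged, which is the difference from the conventional case where competing inputs pile on to one another.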

A convention center represents just one possible scenario where ethernet can facilitate the move toward truly integrated lighting control systems. By using available ethernet technology, a centralized control system can be created to provide seamless transition from what were previously two discrete control systems.

To be sure, ethernet has its opponents. Because it is relatively new to the entertainment industry, stage technicians, for example, will have to learn about this new networking technology. However, they may not realize how much they already know about networking that can be applied to ethernet (wiring, terminating, bus architectures) and how many more (human) resources are available when they need help. A Category Five ethernet network is the same in an office or a theatre, and the same contractors can easily be called to service both.

Some people may believe current approved Category Five ethernet wiring is flimsy and prone to damage, particularly in touring situations. It's true that ethernet cables and connectors are lightweight; that's what makes them cheaper. But even though an ethernet cable may be a little easier to damage, it's a lot easier to replace. And with ethernet, a single set of replacement cables covers all the equipment in a show. Traditional control may require four or five sets of expensive cables, multiplying the chance of damage and the cost of keeping replacements on hand. After all, current control cables aren't immune to damage either. A forklift can crush DMX512 and ethernet cable with equal efficiency.

As we noted earlier, different companies use different data standards for their ethernet devices. For example, you can't connect an ETC console directly to a Strand dimmer rack with ethernet, even though both devices understand DMX512. However, adding a DMX node at one end or the other would make a connection possible, and ESTA is currently working on a uniform ethernet protocol to increase interoperability between products from different companies. That's how DMX became a theatrical standard, and ethernet will follow it in a few years.

In addition, some people wrongly assume that networking will be expensive. Generally speaking, ethernet wiring is cheaper than most other theatrical control cabling, and one ethernet cable can replace four or five other types of control networks. Today, theatrical networks do require converters to get many devices to use ethernet data, but converters are becoming more common and less expensive, and more and more devices are becoming ethernet capable. Even though small installations are still cheaper to install using traditional control, ethernet is becoming more and more economical as time passes. At some point in the not-too-distant future, ethernet could become the best answer for every application.

Modems and networks

There were apparently some early modems used by the US Air Force in the 1950s, but the first commercial ones were made a decade later. The earliest modems ran at 75 bps (bits per second). That's about 1/750th of the speed of current modems, so they were pretty slow! For early networking enthusiasts, though, the first modems ran at 300 bps. Then came 1200 bps, and by 1989, 2400 bps modems.

By 1994, domestic modems had got to 28.8 kilobits per second - which was just as well, because by then we were beginning to send more than text messages over the Internet. This was thought to be an upper limit for phone line transmissions. But along came the 56k modem, and a new set of standards, so the speeds continue to push the envelope of the capacity of the telephone system.
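To get a feel for these speeds, here is a small Python sketch that estimates how long one file would take to send at each modem speed mentioned above. The 100 KB file size is an arbitrary choice for illustration, and the calculation ignores protocol overhead, compression, and line noise, so real transfers would differ.

```python
def transfer_seconds(size_bytes, bits_per_second):
    """Ideal transfer time, ignoring protocol overhead and line noise."""
    return size_bytes * 8 / bits_per_second

# Modem speeds mentioned in the text, in bits per second.
MODEM_SPEEDS = [75, 300, 1200, 2400, 28_800, 56_000]

# Hypothetical 100 KB file, chosen only for illustration.
FILE_SIZE = 100_000

for bps in MODEM_SPEEDS:
    minutes = transfer_seconds(FILE_SIZE, bps) / 60
    print(f"{bps:>6} bps: {minutes:8.1f} minutes")

# Ratio between a 56k modem and the earliest 75 bps modems.
print(f"56k is about {56_000 / 75:.0f} times faster than 75 bps")
```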

So much so that many of us have moved on, into wireless networks, and into "broadband" systems, which allow much faster speeds. But modems made the first critical link between computers and telephones, and began the age of internetworking.

Another of the former Arpanet contractors, Robert Metcalfe, was responsible for the development of Ethernet, which drives most local area networks.

Ethernet essentially made a version of the packet switching and Internet protocols which were being developed for Arpanet available to cabled networks. After a stint at the innovative Xerox Palo Alto laboratories, Metcalfe founded a company called 3Com which released products for networking mainframes and minicomputers in 1981, and personal computers in 1982.

With these developments in place, tools were readily available to connect both old and new style computers, via wireless, cable, and telephone networks. As the networks grew, other companies such as Novell and Cisco began to develop more complex networking hubs, bridges, routers and other equipment. By the mid 1980s, everything that was needed for an explosion of internetworking was in place.

Early Internet - History of PC networking

At the same time as the academic and research communities were creating a network for scientific purposes, a lot of parallel activity was going on elsewhere building computer networks as well.

A lot of the West Coast hackers belonged to the Homebrew Computer Club, whose meetings were moderated by Lee Felsenstein. Lee had actually begun networking computers before the development of the PC, with his Community Memory project in the early 1970s. This system had dumb terminals (like computer screens with keyboards) connected to one large computer that did the processing. These were placed in laundromats, the Whole Earth Access store, and community centres in San Francisco. This network used permanent links over a small geographical area rather than telephone lines and modems.

The first public bulletin board using personal computers and modems was written by Ward Christensen and Randy Suess in Chicago in 1978 for the early amateur computers. It was about 1984 that the first bulletin boards using the IBM (Bill Gates/Microsoft) operating system and Apple operating systems began to be used. The most popular of these was FidoNet.

At that time the Internet technologies were only available on the UNIX computer operating system, which wasn't available on PCs. A piece of software called ufgate, developed by Tim Pozar, was one of the first bridges to connect the FidoNet world to the Internet world. An alternative approach undertaken by Scott Weikart and Steve Fram for the Association for Progressive Communications saw UNIX being made available on special low cost PCs in a distributed network.

In the community networking field early systems included PEN (Public Electronic Network) in Santa Monica, the WELL (Whole Earth 'Lectronic Link) in the Bay area of San Francisco, Big Sky Telegraph, and a host of small businesses with online universities, community bulletin boards, artists' networks, seniors' clubs, women's networks, etc.

Gradually, as the 1980s came to a close, these networks also began joining the Internet for connectivity and adopted the TCP/IP standard. Now the PC networks and the academic networks were joined, and a platform was available for rapid global development.

By 1989 many of the new community networks had joined the Electronic Networkers Association, which preceded the Internet Society as the association for network builders. When they met in San Francisco in 1989, there was a lot of activity, plus some key words emerging: connectivity and interoperability. Not surprisingly in the California hippy culture of the time, the visions for these new networks included peace, love, joy, Marshall McLuhan's global village, the paperless office, electronic democracy, and probably Timothy Leary's Home Page. However, new large players such as America Online (AOL) were also starting to make their presence felt, and a more commercial future was becoming obvious. Flower power gave way to communications protocols, and Silicon Valley just grew and grew.

PEN (the Public Electronic Network) in Santa Monica may be able to claim the mantle of being the first local government based network of any size. Run by the local council, and conceived as a means for citizens to keep in touch with local government, its services included forms, access to the library catalogue, city and council information, and free email.

PEN started in February 1989, and by July 1991 had 3,500 users. One of the stories PEN told about the advantages of its system was the consultation it had with the homeless people of Santa Monica. The local council decided that it would be good to consult the homeless to find out what the city government could do for them. The homeless came back via email with simple needs: showers, washing facilities, and lockers. Santa Monica, a city of 96,000 people at the time, was able to take this on board and provide some basic dignity for the homeless, and at a pretty low cost. This is probably the first example of electronic democracy in action.

Meanwhile, back in the academic and research world, there were many others who wanted to use the growing network but could not because of military control of Arpanet. Computer scientists at universities without defence contracts obtained funding from the National Science Foundation to form CSNet (Computer Science Network). Other academics who weren't computer scientists also began to show interest, so soon this started to become known as the "Computer and Science Network". In the early days, however, only a few academics used the Internet at most universities. It was not until the 1990s that the penetration of Internet in academic circles became at all significant.

Because of fears of hackers, the Department of Defence created a new separate network, MILNet, in 1982. By the mid-1980s, ARPANET was phased out. The role of connecting university and research networks was taken over by CSNet, later to become the NSF (National Science Foundation) Network.

The NSFnet was to become the U.S. backbone for the global network known as the Internet, and a driving force in its early establishment. By 1989 ARPANet had disappeared, but the Information Superhighway was just around the corner.

Computer History

First electronic computer (1943) : the building of Colossus

By designing a huge machine now generally regarded as the world's first programmable electronic computer, the then Post Office Research Branch played a crucial but secret role in helping to win the Second World War. The purpose of Colossus was to decipher messages that came in on a German cipher machine, called the Lorenz SZ.

The original Colossus used a vast array of telephone exchange parts together with 1,500 electronic valves and was the size of a small room, weighing around a ton. This 'string and sealing wax affair' could process 5,000 characters a second to run through the many millions of possible settings for the code wheels on the Lorenz system in hours - rather than weeks.

Both machines were designed and constructed by a Post Office Research team headed by Tommy Flowers 1 at Dollis Hill and transported to the secret code-breaking centre at

Colossus (1941) : inside the machine

During the Second World War the Germans used a Lorenz encoding teleprinter to transmit their high-command radio messages. The teleprinter used the 5-bit Baudot code, and the Lorenz machine enciphered the original text by successively adding two characters to each character before transmission. The same two characters were applied to the received text at the other end to reveal the original message.

Gilbert Vernam had developed this scheme in America, using two synchronised tapes to generate the additional random characters. Lorenz replaced the tapes with mechanical gearing - so it wasn't a genuinely random sequence - just extremely complex.
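The "adding" in Vernam's scheme is addition without carry on each bit, which is the XOR operation. The Python sketch below shows the principle only: the 5-bit values are arbitrary stand-ins rather than real Baudot characters, and a single key stream stands in for the combined effect of the two added characters.

```python
def vernam(chars, key):
    """Combine each 5-bit character with its key character using XOR."""
    return [(c ^ k) & 0b11111 for c, k in zip(chars, key)]

# Arbitrary 5-bit values standing in for teleprinter characters.
plaintext = [0b10100, 0b00001, 0b11000, 0b00110]
key       = [0b01101, 0b11011, 0b00111, 0b10010]

ciphertext = vernam(plaintext, key)
recovered = vernam(ciphertext, key)   # same key applied at the receiving end

print([format(c, "05b") for c in ciphertext])
print(recovered == plaintext)
```

Applying the same key twice cancels it out, which is why the receiving machine only needed to generate the identical key sequence to reveal the message.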

But in August 1941 the Germans made a bad mistake. A tired operator sent almost the same message again, using the same wheel settings. It meant the British were able to calculate the logical structure inside the Lorenz.
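Why reusing the same settings is so damaging can be shown with a toy example in Python. The values below are arbitrary 5-bit numbers, not real intercepts, and the sketch shows only the underlying principle, not the analysis the codebreakers actually carried out: when two messages are enciphered with the same key stream, combining the two ciphertexts removes the key completely.

```python
def xor_stream(a, b):
    """XOR two equal-length sequences of 5-bit values, element by element."""
    return [x ^ y for x, y in zip(a, b)]

# One key stream, used twice by mistake (arbitrary illustrative values).
key = [0b01101, 0b11011, 0b00111, 0b10010]

# Two nearly identical messages: only the last character differs.
message_1 = [0b10100, 0b00001, 0b11000, 0b00110]
message_2 = [0b10100, 0b00001, 0b11000, 0b01110]

cipher_1 = xor_stream(message_1, key)
cipher_2 = xor_stream(message_2, key)

# The key cancels out: what remains depends only on the two messages.
difference = xor_stream(cipher_1, cipher_2)
print(difference == xor_stream(message_1, message_2))
```

With the key eliminated, an analyst who can guess parts of one message can work out the other, and from there the key stream itself.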

Colossus was then built to find the Lorenz wheel settings used for each message, using a large electronic programmable logic calculator, driven by up to 2,500 thermionic valves. The computer was fast, even by today's standards. It could break the combination in about two hours - the same as today's modern Pentium PC.

Colossus Mk II (1944) : a bigger better Colossus

Without the contribution of the codebreaking activity, in which Colossus played such a major part, the Second World War would have lasted considerably longer.

By the time of the Allied invasion of France in the early summer of 1944, a Colossus Mk II (using nearly twice as many valves to power it) was almost ready.

The head of the Post Office Research Team, Tommy Flowers, had been told that Colossus Mk II had to be ready by June 1944 or it would not be of any use. He was not told the reason for the deadline, but realising that it was significant he ensured that the new version was ready for June 1, five days before D-Day.

It was in the build-up to D-Day and during the European campaign that followed that Colossus proved most valuable, since it was able to track in detail communications between Hitler and his field commanders.

Top secret : the ultimate Chinese walls

Colossus weighed around 35 tonnes in Mark II form. Its 2,500 valves, consuming 4.5 kW, were spread over two banks of racks 7 feet 6 inches high by 16 feet wide, spaced 6 feet apart. Thus the whole machine was around 80 feet long and 40 feet wide.

This huge machine was also one of the most closely guarded secrets of the war yet required dozens of people to build, many of them outside the military establishment in the Post Office.

Tommy Flowers was one of the very few entrusted with the overall plan - and even he didn't know the full details of the German codes.

In order to ensure security, Colossus was broken down into modules - each given to a separate Post Office team at Dollis Hill. The teams were kept apart - each having no idea of the overall shape of the ground breaking machines they were creating.

The building of SIGSALY (1943) : pioneer digital telephone system

Another secret wartime computer whose existence was finally revealed many years later was SIGSALY - the secret 'scrambling' system devised to protect the security of high level Allied telephone traffic.

SIGSALY - originally codenamed Project X - was also known as 'Green Hornet'. It was the first unbreakable speech coding system, using digital cryptography techniques, with one time digital keys being supplied by synchronised gramophone discs.

SIGSALY was built in the

The first priority was to protect the hotline between the Cabinet War Room bunker under Downing Street and the White House in

1. Tommy Flowers (1905-1998) : creating the colossus

Tommy Flowers built the first computer, as a codebreaking device during the Second World War.

Flowers was a Londoner with a passion for electronics. Having gained his degree in electronic engineering he went to work for the Post Office. His dream was to try to convert Britain's mechanical telephone system into an electronic one, but opinion was against him.

During the Second World War he was drafted into Bletchley Park to join the ranks of mathematicians and cryptographers who were trying to crack Germany's code system.

He used his telephone experience to turn his fellow experts' ideas into an electronic codebreaking device named 'Colossus', which was in effect the first electronic computer. By the end of the war his team had built ten machines, each one improving on the one before. Most were dismantled afterwards and the plans destroyed for security reasons.

2. Reeves, Alec Harley (1902-1971) : a peaceful man with a rapid pulse

Alec Reeves devised Pulse-Code Modulation, the first digital coding system, which liberated bandwidth.

Reeves, a natural tinkerer, grew up in the Home Counties. He glided through his electrical engineering degree to take a job developing long-wave transatlantic radio communications in the 1920s. He also helped develop short-wave and microwave radio systems.

Reeves became acutely aware of the shortcomings of analogue communication and this led him to develop Pulse-Code Modulation in 1937. It was a long time before the work was fully appreciated, but in 1969 he received the CBE - and a postal stamp commemorating PCM was issued.

Reeves was peace-loving and reluctant to work on offensive weapons, so during the Second World War he developed pinpoint bombing aids, which helped reduce civilian casualties, for which he received an OBE.

He became head of research on electronic switching systems at Standard Telecommunication Laboratories until he retired. Reeves dedicated his private life to helping others, particularly in youth and community projects and rehabilitating prisoners.

Finding the pass for a .rar file

You download a file and find out that it is locked with a password, which you don't have. Cracking the password with software like Advanced password recovery is out of the question because it takes nearly 3 days to guess an 8-character password, and no one can spend that kind of time.

Today I am going to post a method to find passwords for archive files which you downloaded from file sharers like Rapidshare, Megaupload, etc. It is pretty easy and fast, and doesn't require you to use any software other than a browser.

What you need -

1. A browser (preferably Mozilla FireFox)

2. The file for which you are searching the password must be popular.

How to do it -

Let's assume that one wants to find the password for this file -

http://rapidshare.de/files/5968004/Dead.To.Rights.2-RELOADED.part01.rar.html

Copy the name of the file, in this case Dead.To.Rights.2-RELOADED.part01.rar, into the Google search toolbar in Firefox and run the search.

[Note that the full link to sites has been masked in order to preserve board policy]

By checking the actual URL one can see that

/forum/index.php?showtopic=8927&view=findpost&p=119588

is a link to a topic in a forum, and hence by clicking on the link, we are taken to a forum page.

The forum is in some other language, but it doesn't matter. On scrolling down the page one can see the links to the file for which we need the password. Most probably the password will be posted at the end of the link list.

If it is not posted then you can try the other links or search in other search engines or you can even add " " to the keyword and then search.

Hope this small guide was helpful to you. Now you no longer need to ask others for the password to files.

Thursday, 16 October 2008

Ubuntu 8.04 LTS

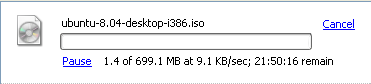

Because I’m currently downloading it, and will install Ubuntu on my second-hand notebook. The notebook is running on a Pentium 3 at 1.2 GHz with 384 MB of RAM. Can I run it?

Kiriman Dari Neraka

It's very funny when something called a virus sits on our computer. OK, I should say this virus is only for Windows users (don’t worry, Linux users, you're safe). I read about this virus in Harian Metro. You can find this virus on your local C drive in a file named HELP.ME!!.html. If you open that file, another file will appear on your screen and you’ll see a popup that says “Kiriman Dari Neraka”.

The virus was detected a couple of months ago, has started to annoy internet users, and is well known as “flash.10.exe”.

Beware!

I don’t know how to prevent this file from bombarding other computer users, but as far as I know, it attacks anyone who opens the file named HELP.ME!!.html. Always take precautions with file extensions such as “.EXE”, “.VBS”, “.COM”, and “.SYS”, and don’t forget document extensions such as “.doc”, “.xls”, and “.zip”.

If affected?

It will slow down your CPU and RAM as well. You will notice that new files have been created: Flash.10.exe, msconfig.com, cmd.com, ping.com, regedit.com, aweks.pikz, seram.pkz, msn.msn.

The safest way is?

If someone asks you how to stop it or delete it, just tell them to use Ubuntu instead of Windows. Go to melayubuntu if you need something for your Ubuntu.

Tuesday, 7 October 2008

Google AdSense: You've Got Ads!

Can I add more sites to my account?

Certainly! Once you've added the code to one website, feel free to add it to more of your sites or pages that comply with our program policies (http://www.google.com/

How can I block certain ads from showing on my site?

If you see ads that you'd prefer not to display, don't worry. You can prevent these ads from appearing on your site by using your Competitive Ad Filter to block them. Our Filter Guide (http://www.google.com/

How do I earn with AdSense?

The ads you're displaying on your site are a combination of cost-per-click (CPC) and cost-per-thousand-impression (CPM) ads. You'll get paid for each valid user click on a CPC ad and each time a user views a CPM ad. Keep in mind that you won't generate any earnings from clicking on your own ads or asking others to click, which are prohibited by our program policies.
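As a rough sketch of the arithmetic behind the two ad types, the Python snippet below adds per-click and per-thousand-impression earnings. The function name and every rate and count in it are made-up illustrative numbers, not actual AdSense rates, and real earnings depend on factors not modelled here.

```python
def estimated_earnings(cpc_clicks, cpc_rate, cpm_impressions, cpm_rate):
    """Earnings from CPC clicks plus CPM impressions (rate per 1,000 views)."""
    return cpc_clicks * cpc_rate + cpm_impressions / 1000 * cpm_rate

# Hypothetical figures: 10 valid clicks at $0.25 each,
# plus 2,000 impressions at $1.50 per thousand.
total = estimated_earnings(10, 0.25, 2000, 1.50)
print(f"Estimated earnings: ${total:.2f}")
```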

When will I get paid?

For an overview of our payment schedule and requirements for getting paid, visit our Payment Guide: http://www.google.com/adsense_

What if my ads aren't relevant?

If you've had the code on your site for more than 48 hours, and you're still seeing public service ads or ads that aren't relevant to your site content, it might be for a number of reasons - visit our Help Center for details: http://www.google.com/adsense_

How can I help keep my account in good standing?

To start, don't click on your own ads or ask others to do so. Plus, take the time to read and comply with our full list of program policies ( http://www.google.com/adsense_

Where can I get more help?

Check out the AdSense Help Center for quick and comprehensive answers at any time: http://www.google.com/adsense_

Best of luck with AdSense!